Random-Access Memory

Creative Essay

Course: Operating Systems

Topic: Random-Access Memory

Random-access memory (RAM) is one of a computer's main components; the system cannot operate without it. The amount and characteristics of the installed RAM directly affect how fast the computer runs. Let us look, at a simple consumer level, at what kinds of RAM exist and why a computer needs it at all.

As its Russian name ("operative memory") suggests, RAM, also called main memory or simply "memory", serves for the operative, that is, temporary, storage of the data needed for work. That explanation leaves two questions open: what "temporary" means, and why data should be kept in RAM at all when there is a hard disk. The first question is the easier one. RAM is built so that it retains data only while voltage is applied, which makes it volatile memory, unlike a hard disk. Shutting down or rebooting the computer clears RAM, and all data held in it at that moment is lost. Even a brief interruption of power to the memory modules can wipe them or corrupt part of the information. In other words, RAM holds the data loaded into it for at most one session of computer operation.

RAM therefore serves as a buffer between the CPU and the hard drive. The hard disk is non-volatile and stores all of the computer's information, but the price for this is its low speed. If the processor fetched data directly from the hard disk, it would work at a snail's pace. The solution is an additional buffer between the two, in the form of RAM.

RAM is volatile and needs constant power, but it is many times faster. When the processor needs some data, that data is read from the hard drive and loaded into RAM, and all further operations on it take place there. When the work is finished, results that need to be kept are sent back to the hard disk to be written, and they are removed from RAM to free space for other data. If the results do not need to be saved, the RAM is simply cleared.

That is a highly simplified picture of how they interact. Besides the CPU, other components, such as the graphics card, may need information from RAM. Naturally, RAM holds a great deal of data at once, because every program you run and every file you open is loaded into it. The files of the browser in which you are viewing this site, and the web page itself, reside in RAM.

Note that data from the hard disk is copied into RAM, so until changes to it are saved back to disk, the disk still holds the old version. That is why, after opening a Word file and editing it, you have to save at the end: the file is written back to the hard disk and overwrites the copy stored there.
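The copy-then-save behaviour described above can be illustrated with a small sketch. The file name, the helper `edit_in_memory`, and the `demo` function are hypothetical and exist only for this illustration; the point is that editing the in-memory copy leaves the file on disk unchanged until it is written back.

```python
import os
import tempfile


def edit_in_memory(path, appended, save):
    """Load a file into RAM, edit the in-memory copy, optionally save it."""
    with open(path, "r", encoding="utf-8") as f:
        in_memory = f.read()              # copy: disk -> RAM
    in_memory += appended                 # the edit touches only the RAM copy
    if save:
        with open(path, "w", encoding="utf-8") as f:
            f.write(in_memory)            # copy: RAM -> disk, overwriting the old version
    with open(path, "r", encoding="utf-8") as f:
        return f.read()                   # what the disk holds afterwards


def demo():
    """Return the disk contents after an unsaved edit and after a saved edit."""
    with tempfile.TemporaryDirectory() as folder:
        path = os.path.join(folder, "note.txt")   # hypothetical file name
        with open(path, "w", encoding="utf-8") as f:
            f.write("old version")
        unsaved = edit_in_memory(path, " (edited)", save=False)
        saved = edit_in_memory(path, " (edited)", save=True)
        return unsaved, saved
```

Calling `demo()` returns the disk contents after an unsaved edit and then after a saved one; only the second reflects the change.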

Computer components interact not directly but through various interfaces; the processor and RAM, for example, exchange information over the system bus.

The performance of the whole computer depends on the speed of all its parts, and the slowest of them becomes the bottleneck that holds back the entire system. The advent of RAM greatly increased speed but did not solve every problem. First, RAM itself is not infinitely fast; second, the connecting interfaces also have limited bandwidth.

Further development led to devices that need high-speed data processing being given their own built-in memory. This removes the overhead of moving data back and forth, and such devices usually use faster memory than the kind used for RAM. Examples include graphics adapters and the CPU's built-in cache. Even many hard drives now have an internal high-speed buffer that speeds up read and write operations. Why is this high-speed memory not used as main memory? The answer is simple: there are some technical difficulties, but above all it is expensive.

At present, two types of memory can be used as a computer's RAM. Both are semiconductor memories with random access, that is, memory that allows any of its elements (cells) to be accessed by its address.

SRAM (static random-access memory) is built from semiconductor flip-flops and is very fast. It has two main drawbacks: high cost and large physical size. Today it is used mainly for small caches in microprocessors or in specialized devices where these drawbacks are not critical, so we will not consider it further.

DRAM (dynamic random-access memory) is the memory most widely used as RAM in computers. It is built from capacitors, offers high storage density, and is relatively cheap. Its drawbacks stem from its design: the small capacitors self-discharge quickly, so their charge must be topped up periodically. This process is called memory refresh, and it is the origin of the name "dynamic memory". Refresh noticeably slows the memory down, so various intelligent schemes are used to reduce the delays.

Technology advances quickly, and memory is no exception. The RAM used in computers today traces its origins to DDR SDRAM. It doubled the transfer rate of earlier designs by performing two transfers per clock cycle (on the rising and falling edges of the signal), hence the name DDR (Double Data Rate). The effective data-transfer frequency is therefore twice the clock frequency. Today it is found almost only in old equipment, but it served as the basis for DDR2 SDRAM.
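The doubling rule can be written out as a short calculation. This is a minimal sketch: the function names are made up for illustration, and the 64-bit module data bus used for the bandwidth figure is an assumption that the essay itself does not state.

```python
def effective_rate_mts(clock_mhz):
    """Effective transfer rate in millions of transfers per second.

    DDR performs two transfers per clock cycle (rising and falling edge).
    """
    return 2 * clock_mhz


def peak_bandwidth_mb_s(clock_mhz, bus_width_bits=64):
    """Theoretical peak bandwidth in MB/s.

    bus_width_bits=64 is an assumption (a typical module data bus width).
    """
    return effective_rate_mts(clock_mhz) * bus_width_bits // 8
```

For a 200 MHz clock this gives an effective rate of 400 and, under the 64-bit assumption, a theoretical peak of 3200 MB/s.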

DDR2 SDRAM doubled the bus frequency, although latencies rose somewhat. Because it uses a new package with 240 contacts per module, it is not backward compatible with DDR SDRAM. Its effective frequency ranges from 400 to 1200 MHz.

The most common memory today is the third generation, DDR3 SDRAM. Technological improvements and a lower supply voltage reduced power consumption and raised the effective frequency, which ranges from 800 to 2400 MHz. Despite the same package and 240 contacts, DDR2 and DDR3 modules are not electrically compatible. To prevent accidental installation, the key (a notch in the board) is in a different position.

DDR4 SDRAM is a promising development that will soon replace DDR3, with lower power consumption and higher frequencies, up to 4266 MHz.

Along with operating frequency, timings strongly affect the resulting speed. Timings are the delays between a command and its execution. They are needed so that the memory can "prepare" to carry out the command; otherwise some data may be corrupted. Accordingly, the lower the timings (memory latency), the better: other things being equal, the memory works faster.

The amount of memory that can be installed in a computer depends on the motherboard. It is limited physically by the number of slots and, to a greater extent, by the limits of the particular motherboard or the installed operating system.
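To make the effect of timings concrete, a delay given in clock cycles can be converted to nanoseconds. This is only a sketch: the function name and the example values (an effective frequency of 1600 MHz with a 9-cycle delay, and 2400 MHz with an 11-cycle delay) are illustrative assumptions, not figures from the essay.

```python
def timing_ns(cycles, effective_mhz):
    """Convert a timing in clock cycles to nanoseconds.

    The real clock runs at half the effective (double data rate) frequency,
    and one cycle at f MHz lasts 1000 / f nanoseconds.
    """
    clock_mhz = effective_mhz / 2
    return cycles * 1000 / clock_mhz
```

Under these assumptions the faster module has the larger cycle count but the shorter delay in nanoseconds, which is why frequency and timings have to be judged together.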

In general, 2 GB is enough for web browsing and office programs. If you play modern games, plan to edit photos or video actively, or use other memory-hungry programs, increase the installed memory to at least 4 GB.

Keep in mind that Windows currently ships in two variants: 32-bit (x86) and 64-bit (x64). In 32-bit versions, the maximum memory available to the operating system is roughly 2.8 to 3.2 GB, depending on the combination of components; even if you install 4 GB, the system will see at most 3.2 GB. The limitation dates from the early days of operating systems, when no one imagined such amounts of memory even in their wildest dreams. There are ways to let a 32-bit system work with 4 GB, but they are workarounds and do not work on every configuration.
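The 4 GB figure follows from the width of a 32-bit address. The sketch below works through the arithmetic; the idea that part of the address range is reserved for hardware devices is the usual explanation for the 2.8 to 3.2 GB figure, and the 0.8 GB reserved amount is an illustrative assumption, not a value from the essay.

```python
GIB = 2 ** 30  # bytes in one gigabyte (binary)


def addressable_gb(address_bits):
    """Number of gigabytes reachable with byte addresses of the given width."""
    return 2 ** address_bits / GIB


def visible_gb(address_bits, reserved_gb):
    """Memory left for RAM after part of the address range is reserved.

    reserved_gb is a hypothetical amount of address space set aside for devices.
    """
    return addressable_gb(address_bits) - reserved_gb
```

With 32 address bits the ceiling is exactly 4 GB, and reserving 0.8 GB of that range leaves about 3.2 GB visible.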

In addition, Windows 7 Starter is available only as a 32-bit version and is limited to a maximum of 2 GB of RAM.


pin packages (SIPPs). Memory PCBs also operate on a request basis, but unlike memory modules, there is not a priority sequence to go through. The request is made by the requestor, the control circuitry selects either a read or a write operation, and the timing circuitry initiates the read and/or write operations.

Memory Architecture

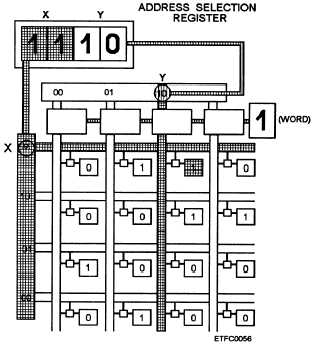

The memory architecture, regardless of the memory type, is consistent. Memories are typically organized in square form so they have an equal number of rows (x) and columns (y) (fig. 6-4). Each intersection of a row and column comprises a memory word address. Each memory address contains a memory word. The selected memory address can contain one or more bits. But for speed and practicality, for a given computer design, the word size typically relates to the CPU and is usually the size of its registers in bits. Word sizes typically range in increments of 8, 16, 32, or 64 bits. Figure 6-5 represents an address with an 8-bit word. The methods used for the arrangement of the rows and columns vary in a given type of memory. The rows and columns are arranged in arrays, memory planes, or matrices.
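The row and column organization can be sketched as a small model. The class `MemoryArray` and the function `split_address` are hypothetical names used only for this illustration; the sketch assumes a square array in which a linear word address is split into a row index and a column index.

```python
def split_address(address, rows, cols):
    """Map a linear word address to its (row, column) intersection."""
    if not 0 <= address < rows * cols:
        raise ValueError("address out of range")
    return divmod(address, cols)


class MemoryArray:
    """A square memory with one word stored at each row/column intersection."""

    def __init__(self, side, word_bits=8):
        self.side = side
        self.word_bits = word_bits
        self.cells = [[0] * side for _ in range(side)]

    def write(self, address, word):
        """Store a word, rejecting values wider than the word size."""
        if not 0 <= word < 2 ** self.word_bits:
            raise ValueError("word does not fit in the word size")
        row, col = split_address(address, self.side, self.side)
        self.cells[row][col] = word

    def read(self, address):
        """Return the word stored at the given address."""
        row, col = split_address(address, self.side, self.side)
        return self.cells[row][col]
```

In a 4 by 4 array, address 10 falls at row 2, column 2, and an 8-bit word written there can be read back from the same address.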

Figure 6-4. Row (X), column (Y) organization.

MEMORY OPERATIONS

Memories operate on a request, selection, and initiate basis. A memory request or selection and a memory word location are transmitted from the requestor (CPU or I/O sections) to the memory section. The computer's internal bus system transmits the memory request or selection and location to the memory section. The memory operations, regardless of the computer type, share some basic commonalities. Key events must occur to access and store data in memory. Some items only occur with certain types of memories, and we discuss these as you study each different type of memory. We also discuss the items that are common to most memories: control circuits, timing circuits, and memory cycle. In addition, we present methods used for detecting faults and protecting memory.

Memory Interface Circuits

The memory interface circuits include all the lines of communication (buses) and the interfacing register between the requester (CPU or I/O(C)) and memory. The communications lines include some of the following:

- Data (bidirectional bus)
- Control lines (write byte and interleave [for large computers])
- Memory request
- Read and write enables
- Data ready
- Data available

The interface (data) register (often designated as the "Z" register) functions as the primary interfacing component of memory. Before the read/write operation, this register transfers the selected memory address to the address register. All data entering and leaving the memory is temporarily held in this data register. In a write operation, this register receives data from the requester; and in a read operation, this register transmits data to the requester. For computers with destructive readout, it routes the data back to memory to be rewritten.

Control Circuits

The control circuits set up the signals necessary to control the flow of data and address words in and out of memory. They screen the request or selection by units external to memory (the CPU and/or I/O(C)). Depending on the computer type, some of the more common uses of the control circuits include:


OpenCL runtime and concurrency model

David Kaeli, … Dong Ping Zhang, in Heterogeneous Computing with OpenCL 2.0, 2015

5.1.1 Blocking Memory Operations

Blocking memory operations are perhaps the most commonly used and easiest to implement method of synchronization. Instead of querying an event for completion, and blocking the host’s execution until the memory operation has completed, most memory transfer functions simply provide a parameter that enables synchronous functionality. This option is the blocking_read parameter in clEnqueueReadBuffer(), and has synonymous implementations in the other data transfer application programming interface (API) calls.

    cl_int
    clEnqueueReadBuffer(
        cl_command_queue command_queue,
        cl_mem buffer,
        cl_bool blocking_read,
        size_t offset,
        size_t size,
        void *ptr,
        cl_uint num_events_in_wait_list,
        const cl_event *event_wait_list,
        cl_event *event)

Enabling the synchronous functionality of a memory operation is commonly used when transferring data to or from a device. For example, when transferring data from a device to the host, the host should not try to access the data until the transfer is complete as the data will be in an undefined state. Therefore, the blocking_read parameter can be set to CL_TRUE to ensure that the transfer is complete before the call returns. Using this synchronous functionality allows host code that utilizes the data to be placed directly after the call with no additional synchronization steps. Blocking and nonblocking memory operations are discussed in detail in Chapter 6.
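The difference between a blocking and a nonblocking transfer can be shown with a loose analogy in plain Python threading. This is not the OpenCL API: `enqueue_read`, its parameters, and the use of `threading.Event` in place of a `cl_event` are all assumptions made for illustration.

```python
import threading


def enqueue_read(device_buffer, host_buffer, blocking):
    """Copy device_buffer into host_buffer on a worker thread.

    Returns an event that is set when the copy has finished. With
    blocking=True the call itself waits for that event, so the caller may
    use host_buffer immediately after the call returns.
    """
    done = threading.Event()

    def transfer():
        host_buffer[:] = device_buffer   # the simulated data transfer
        done.set()                       # signal completion

    threading.Thread(target=transfer).start()
    if blocking:
        done.wait()                      # synchronous behaviour
    return done
```

With `blocking=True` the host data is valid as soon as the call returns; with `blocking=False` the caller must wait on the returned event before reading `host_buffer`.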

URL: https://www.sciencedirect.com/science/article/pii/B9780128014141000053

Instruction Scheduling

Keith D. Cooper, Linda Torczon, in Engineering a Compiler (Second Edition), 2012

12.3.2 Scheduling Operations with Variable Delays

Memory operations often have uncertain and variable delays. A load operation on a machine with multiple levels of cache memory might have an actual delay ranging from zero cycles to hundreds or thousands of cycles. If the scheduler assumes the worst-case delay, it risks idling the processor for long periods. If it assumes the best-case delay, it will stall the processor on a cache miss. In practice, the compiler can obtain good results by calculating an individual latency for each load based on the amount of instruction-level parallelism available to cover the load’s latency. This approach, called balanced scheduling, schedules the load with regard to the code that surrounds it rather than the hardware on which it will execute. It distributes the locally available parallelism across loads in the block. This strategy mitigates the effect of a cache miss by scheduling as much extra delay as possible for each load. It will not slow down execution in the absence of cache misses.

Figure 12.4 shows the computation of delays for individual loads in a block. The algorithm initializes the delay for each load to one. Next, it considers each operation i in the dependence graph D for the block. It finds the computations in D that are independent of i, called Di. Conceptually, this task is a reachability problem on D. We can find Di by removing from D every node that is a transitive predecessor of i or a transitive successor of i, along with any edges associated with those nodes.

Figure 12.4. Computing Delays for Load Operations.

The algorithm then finds the connected components of Di. For each component C, it finds the maximum number N of loads on any single path through C. N is the number of loads in C that can share operation i's delay, so the algorithm adds delay(i) / N to the delay of each load in C. For a given load l, the operation sums the fractional share of each independent operation i's delay that can be used to cover the latency of l. Using this value as delay(l) produces a schedule that shares the slack time of independent operations evenly across all loads in the block.
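The delay computation described above can be sketched as follows. This is an illustration under stated assumptions, not the book's Figure 12.4: the dependence graph is given as a node list and an edge list, `op_delay` is a hypothetical table supplying a fixed delay(i) for every operation, and connected components are found by treating edges as undirected.

```python
from collections import defaultdict


def reachable(start, adjacency):
    """Return all nodes reachable from start by following adjacency edges."""
    seen, stack = set(), [start]
    while stack:
        node = stack.pop()
        for nxt in adjacency[node]:
            if nxt not in seen:
                seen.add(nxt)
                stack.append(nxt)
    return seen


def max_loads_on_path(component, succ, loads):
    """Largest number of loads on any single path inside the component."""
    memo = {}

    def best(node):
        if node not in memo:
            tail = max((best(m) for m in succ[node] if m in component), default=0)
            memo[node] = (1 if node in loads else 0) + tail
        return memo[node]

    return max(best(node) for node in component)


def balanced_delays(nodes, edges, loads, op_delay):
    """Compute a per-load delay by sharing independent operations' delays."""
    loads = set(loads)
    succ, pred = defaultdict(list), defaultdict(list)
    for a, b in edges:
        succ[a].append(b)
        pred[b].append(a)

    delay = {load: 1.0 for load in loads}          # initialize each load to one
    for i in nodes:
        # D_i: drop i and its transitive predecessors and successors.
        related = reachable(i, succ) | reachable(i, pred) | {i}
        independent = set(nodes) - related
        unvisited = set(independent)
        while unvisited:
            # Grow one connected component of D_i (edges taken as undirected).
            root = unvisited.pop()
            component, stack = {root}, [root]
            while stack:
                node = stack.pop()
                for nxt in succ[node] + pred[node]:
                    if nxt in independent and nxt not in component:
                        component.add(nxt)
                        stack.append(nxt)
            unvisited -= component
            n = max_loads_on_path(component, succ, loads)
            if n == 0:
                continue                           # no loads to share with
            for load in component & loads:
                delay[load] += op_delay[i] / n     # share delay(i) across loads
    return delay
```

For a block with a load `l1` feeding an add `a`, plus an unrelated load `l2`, the independent load `l2` collects the delay of both `l1` and `a`, while `l1` collects only the delay of `l2`.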

URL: https://www.sciencedirect.com/science/article/pii/B9780120884780000128

High-Level Synchronization Tools

Victor Alessandrini, in Shared Memory Application Programming, 2016

9.11.3 Comment on Memory Operations

The previous example involves memory operations, and it seems to contradict the conclusions of Chapter 7 concerning memory coherency: exclusive writes are naturally protected by a mutex, but read operations must lock the same mutex to make sure they capture the last written value to the memory location involved. Looking back to Figure 9.6, it is clear that read operations are not locking anything: otherwise, they could not act concurrently. Therefore, we may ask: how do we know that memory consistency is guaranteed for memory operations?

The answer is that the write and the read sectors in Figure 9.6 are separated by memory fences located at boundary points A, B, C, D, and E, provided by an internal mutex. This guarantees that memory will be coherent every time the read operations start.

URL: https://www.sciencedirect.com/science/article/pii/B9780128037614000095

Subliminal Perception or “Can We Perceive and Be Influenced by Stimuli That Do Not Reach Us on a Conscious Level?”

Andreas Riener, in Emotions and Affect in Human Factors and Human-Computer Interaction, 2017

Models of Human Memory

To better understand how human memory operates, several models have been developed and used to interpret how we make decisions, react to external stimuli, or refrain from an action because information was overlooked. Before discussing models more thoroughly, we will look at the basics of information processing.

Information processing in humans is measured in terms of time units (seconds) and information units (bits). The information unit “1 bit” is allocated to each decision in a dichotomous partition on a “yes/no” or “true/false” basis (Lehrl and Fischer, 1988). The grand information processing capacity of the human mind has the potential to handle about 11 million bits/s (Norretranders, 1997) (Fig. 19.2), but due to the inherent physical limitations of information processing, the number of bits processed consciously is much lower. In (Norretranders, 1997), an (optimistic) maximum of about 50 bits/s was indicated, but the exact number of bits to be processed explicitly (or consciously) actually depends on the task. Our explicit information processing speed can be reduced to as little as 45 bits/s when reading silently, 40 bits/s while using spoken speech, 30 bits/s when reading aloud, and 12 bits/s when executing calculations in our head (Hassin et al., 2005, p.82). Tactile information is processed at approximately 2–56 bits/s (Mandic et al., 2000). According to Overgaard and Timmermans (2010), “we may not even be able to experience all that we perceive.” This coincides with our own observations in user studies, where we found that race car drivers (or other highly experienced drivers) are likely to be better equipped to process information and adapt steering behavior using a minimum of cognitive resources than less trained, regular drivers (Riener, 2010, pp.138).

Figure 19.2. Information flow in humans.

From a total of about 11 million bits/s, only around 16 bits are perceived consciously. I1, Seeing; I2, Hearing; I3, Touching; I4, Temperature; I5, Muscles; I6, Smelling; I7, Sense of taste; O1, Skeleton; O2, Hand; O3, Speech muscle; O4, Face.

Thus, compared to our total theoretical capacity, information processed consciously constitutes only a small fraction of all incoming information. The remainder is apparently processed without active awareness (or lost). The availability of such a large pool of processable information and the high speed at which information is processed further motivates us to explore making vital information accessible via subliminal routes in cognitive workload-sensitive settings.

URL: https://www.sciencedirect.com/science/article/pii/B9780128018514000197

Code Shape

Keith D. Cooper, Linda Torczon, in Engineering a Compiler (Second Edition), 2012

7.9.2 Saving and Restoring Registers

Under any calling convention, one or both of the caller and the callee must preserve register values. Often, linkage conventions use a combination of caller-saves and callee-saves registers. As both the cost of memory operations and the number of registers have risen, the cost of saving and restoring registers at call sites has increased, to the point where it merits careful attention.

In choosing a strategy to save and restore registers, the compiler writer must consider both efficiency and code size. Some processor features impact this choice. Features that spill a portion of the register set can reduce code size. Examples of such features include register windows on the SPARC machines, the multiword load and store operations on the Power architectures, and the high-level call operation on the VAX. Each offers the compiler a compact way to save and restore some portion of the register set.

While larger register sets can increase the number of registers that the code saves and restores, in general, using these additional registers improves the speed of the resulting code. With fewer registers, the compiler would be forced to generate loads and stores throughout the code; with more registers, many of these spills occur only at a call site. (The larger register set should reduce the total number of spills in the code.) The concentration of saves and restores at call sites presents the compiler with opportunities to handle them in better ways than it might if they were spread across an entire procedure.

- Using multi-register memory operations. When saving and restoring adjacent registers, the compiler can use a multiregister memory operation. Many ISAs support doubleword and quadword load and store operations. Using these operations can reduce code size; it may also improve execution speed. Generalized multiregister memory operations can have the same effect.

- Using a library routine. As the number of registers grows, the precall and postreturn sequences both grow. The compiler writer can replace the sequence of individual memory operations with a call to a compiler-supplied save or restore routine. Done across all calls, this strategy can produce a significant savings in code size. Since the save and restore routines are known only to the compiler, they can use a minimal call sequence to keep the runtime cost low.

  The save and restore routines can take an argument that specifies which registers must be preserved. It may be worthwhile to generate optimized versions for common cases, such as preserving all the caller-saves or callee-saves registers.

- Combining responsibilities. To further reduce overhead, the compiler might combine the work for caller-saves and callee-saves registers. In this scheme, the caller passes a value to the callee that specifies which registers it must save. The callee adds the registers it must save to the value and calls the appropriate compiler-provided save routine. The epilogue passes the same value to the restore routine so that it can reload the needed registers. This approach limits the overhead to one call to save registers and one to restore them. It separates responsibility (caller saves versus callee saves) from the cost to call the routine.

The compiler writer must pay close attention to the implications of the various options on code size and runtime speed. The code should use the fastest operations for saves and restores. This requires a close look at the costs of single-register and multiregister operations on the target architecture. Using library routines to perform saves and restores can save space; careful implementation of those library routines may mitigate the added cost of invoking them.
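The combined scheme, in which one value names every register to preserve, can be modelled with a bit mask. This is a toy sketch: the register file is a plain list, and `mask_of`, `save_registers`, `restore_registers`, and `call_with_combined_save` are hypothetical names, not routines from any real compiler runtime.

```python
def mask_of(registers):
    """Build a bit mask with one bit set per register number."""
    mask = 0
    for r in registers:
        mask |= 1 << r
    return mask


def save_registers(register_file, mask):
    """Library-style save routine: copy every register selected by mask."""
    return {r: register_file[r]
            for r in range(len(register_file)) if (mask >> r) & 1}


def restore_registers(register_file, saved):
    """Library-style restore routine: reload the saved registers."""
    for r, value in saved.items():
        register_file[r] = value


def call_with_combined_save(register_file, caller_mask, callee_mask, body):
    """Run body with a single save call and a single restore call."""
    mask = caller_mask | callee_mask              # callee adds its registers
    saved = save_registers(register_file, mask)   # one call to save
    body(register_file)                           # may clobber any register
    restore_registers(register_file, saved)       # one call to restore
    return mask
```

Only the registers named in the combined mask survive the call; the rest keep whatever the body left in them.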

Section Review

The code generated for procedure calls is split between the caller and the callee, and between the four pieces of the linkage sequence (prologue, epilogue, precall, and postreturn). The compiler coordinates the code in these multiple locations to implement the linkage convention, as discussed in Chapter 6. Language rules and parameter binding conventions dictate the order of evaluation and the style of evaluation for actual parameters. System-wide conventions determine responsibility for saving and restoring registers.

Compiler writers pay particular attention to the implementation of procedure calls because the opportunities are difficult for general optimization techniques (see Chapters 8 and 10) to discover. The many-to-one nature of the caller-callee relationship complicates analysis and transformation, as does the distributed nature of the cooperating code sequences. Equally important, minor deviations from the defined linkage convention can cause incompatibilities in code compiled with different compilers.

Review Questions

1. When a procedure saves registers, either callee-saves registers in its prologue or caller-saves registers in a precall sequence, where should it save those registers? Are all of the registers saved for some call stored in the same AR?

2. In some situations, the compiler must create a storage location to hold the value of a call-by-reference parameter. What kinds of parameters may not have their own storage locations? What actions might be required in the precall and postcall sequences to handle these actual parameters correctly?

URL: https://www.sciencedirect.com/science/article/pii/B9780120884780000074

HSA Memory Model

L. Howes, … B.R. Gaster, in Heterogeneous System Architecture, 2016

5.3.2 Background: Conflicts and Races

In an execution, two memory operations, let’s call them C1 and C2, “conflict” if they access the same location, and at least one is a write. If C1 and C2 are from different threads, then we say that C1 and C2 “race,” because we cannot always tell whether C1 or C2 will occur first. For example, in the following program, we cannot say for sure if location A will be 1 or 2 when the program completes because we do not know how the threads will appear to interleave.

{{{ A = 1; }}}  |  {{{ A = 2; }}}

Races can be classified based on whether or not they are “synchronized.” A “memory race” occurs when conflicts have been synchronized (e.g., they occur in a critical section protected by a lock). Memory races are typically benign, because the synchronization will ensure they always execute in the correct order. A “synchronization race” occurs when the conflicting operations are part of a synchronization primitive (e.g., the compare-and-set that implements a lock above). Synchronization races are also benign, because they are designed to enforce an order of the operations they protect.

A “data race” occurs when conflicts have not been synchronized and are not part of a synchronization primitive. Data races are often harmful and unintentional in a program, because they lead to unpredictable behavior.

We say an execution is “data-race-free,” if it does not contain any data races. We say that a program is data-race-free if all executions of that program are data-race-free. The data-race-free classification is important for the HSA memory model, as it joins a growing list of platforms, including C++, Java, and OpenCL, that define memory consistency in terms of data-race-free executions.
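The definitions in this section can be restated as a small classifier. It is a sketch only: `MemOp` and the two boolean flags are hypothetical, and whether a conflict is synchronized or belongs to a synchronization primitive is supplied by the caller rather than derived from a program.

```python
from collections import namedtuple

# One memory operation: issuing thread, accessed location, and whether it writes.
MemOp = namedtuple("MemOp", "thread location is_write")


def conflict(c1, c2):
    """Two operations conflict if they access the same location and one writes."""
    return c1.location == c2.location and (c1.is_write or c2.is_write)


def race(c1, c2):
    """Conflicting operations race if they come from different threads."""
    return conflict(c1, c2) and c1.thread != c2.thread


def classify(c1, c2, synchronized=False, part_of_sync_primitive=False):
    """Name the kind of race, following the definitions in the text."""
    if not race(c1, c2):
        return "no race"
    if part_of_sync_primitive:
        return "synchronization race"
    if synchronized:
        return "memory race"
    return "data race"
```

The two unsynchronized writes to A in the example program classify as a data race; the same pair inside a lock-protected critical section classifies as a memory race.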

URL: https://www.sciencedirect.com/science/article/pii/B9780128003862000043

Thread communication and synchronization on massively parallel GPUs

T. Ta, … B. Jang, in Advances in GPU Research and Practice, 2017

4.2.1 Relationships between two memory operations

OpenCL 2.0 defines the following relationships between two memory operations in a single thread or multiple threads. They can be applied to operations on either global or local memory.

- Sequenced-before relationship. In a single thread (or unit of execution), a memory operation A is sequenced before a memory operation B if A is executed before B. All effects made by the execution of A in memory are visible to operation B. If the sequenced-before relationship between A and B is not defined, A and B are neither sequenced nor ordered [7]. This defines a relationship between any two memory operations in a single thread and how the effects made by one operation are visible to the other (Fig. 8).

  Fig. 8. Sequenced-before relationship.

- Synchronizes-with relationship. When two memory operations are from different threads, they need to be executed in a particular order by the memory system. Given two memory operations A and B in two different threads, A synchronizes with B if A is executed before B. Hence all effects made by A in shared memory are also visible to B [7] (Fig. 9).

  Fig. 9. Synchronizes-with relationship.

- Happens-before relationship. Given two memory operations A and B, A happens before B if
  - A is sequenced before B, or
  - A synchronizes with B, or
  - for some operation C, A happens before C, and C happens before B [7] (Fig. 10).

  Fig. 10. Happens-before relationship.
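The three rules for happens-before amount to taking the union of the two other relations and closing it under transitivity. The sketch below assumes each relation is given as a set of (earlier, later) pairs; the function name and the operation labels in the usage note are hypothetical.

```python
def happens_before(sequenced_before, synchronizes_with):
    """Return the happens-before relation as a set of (A, B) pairs.

    Rules 1 and 2 seed the relation; rule 3 (transitivity through some
    operation C) is applied repeatedly until no new pair appears.
    """
    hb = set(sequenced_before) | set(synchronizes_with)
    changed = True
    while changed:
        changed = False
        for a, c in list(hb):
            for c2, b in list(hb):
                if c == c2 and (a, b) not in hb:
                    hb.add((a, b))
                    changed = True
    return hb
```

If thread 1 writes data and then a flag, thread 2 reads the flag and then the data, and the flag write synchronizes with the flag read, the closure shows that the data write happens before the data read.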

URL: https://www.sciencedirect.com/science/article/pii/B9780128037386000033

Atomic Types and Operations

Victor Alessandrini, in Shared Memory Application Programming, 2016

8.4.5 Consume Instead of Acquire Semantics

The consume option is an optimized version of the acquire. When an atomic load operation takes place:

- If acquire semantics is used, no memory operation following the load can be moved before it.

- If consume semantics is used, the previous memory order constraint only applies to operations following the load that have computational dependence on the value read by the load. This more relaxed requirement reduces the constraints imposed on the compiler, which can now reorder memory operations it could not reorder before, which may result in enhanced performance.

The consume semantics is typically used with atomic pointers. Listing 8.6 shows an example in which a thread transfers to another thread the value of a shared double.

Listing 8.6. “Happens before” with consume-release

In this case, the only "happens before" relation that holds is the one between the initialization of d in thread 1, and the retrieval of its value by the load operation on P in thread 2.

URL: https://www.sciencedirect.com/science/article/pii/B9780128037614000083

Compiler Technology

W.-H. Chung, … W.-M. W. Hwu, in Heterogeneous System Architecture, 2016

7.8 Memory Segment Annotation

As we stated in the previous section, in HSAIL kernels, each memory operation must be associated with a memory segment. This indicates the memory region that a memory operation will access. The notion of memory segments is a fundamental feature of HSA. This feature, however, is typically missing from high-level languages such as C++ AMP. High-level languages put data into a single generic address space, and there is no need to indicate address space explicitly. To resolve this discrepancy, a special transformation is required to append the correct memory segment designation to each memory operation.

In LLVM terminology, "address space" has the same meaning as "memory segment" in HSA. In the Kalmar compiler, after LLVM bitcode is generated, the generated code goes through an LLVM transformation pass to decide and promote (i.e., add type qualifiers to) declarations to the right address space. In theory, it is almost impossible to conclusively deduce the address space for each declaration, because the analyzer lacks the global view that identifies how the kernels interact with each other. However, there are clues we can use to deduce the correct address space in practical programs.

The implementation of array and array_view provides a hint for deducing the correct address space. In C++ AMP, the only way to pass bulk data to kernels is to wrap it in array or array_view. The C++ AMP runtime registers the underlying pointer with the HSA runtime. The data are then used in the kernel by accessing the corresponding pointer in the arguments of the kernel function. Those pointers, as a result, should be qualified as belonging to the global segment, because the data they point to reside in host memory and are visible to all work-items in the grid. The pass iterates through all the arguments of the kernel function, promotes pointers to the global memory segment, and updates all the memory operations associated with those pointers.

The tile_static data declarations cannot be deduced simply by data flow analysis, so they need to be preserved from the Kalmar compiler front end. In the current implementation, declarations with tile_static are placed into a special section in the generated bitcode. The LLVM transformation will identify it and mark all the memory operations with the correct address space.

Let us use a tiny C++ AMP code example to illustrate this annotation process (Figure 7.9).

Figure 7.9. C++ AMP code to demonstrate memory segment annotation.

After the Kalmar compiler front end, the code is transformed to pure LLVM IR (shown in Figure 7.10). There is no address space annotation in this version, and it will produce incorrect results. Notice that at the top of Figure 7.10, variable tmp is placed into a special ELF section (clamp_opencl_local). This section name is inherited from an original implementation of C++ AMP based on OpenCL from MulticoreWare. The section is shared between the OpenCL-based and HSAIL-based C++ AMP implementations, as the nature of the variables placed in this section is compatible between the two implementations.

Figure 7.10. LLVM IR produced for the kernel in Figure 7.9, before memory segment annotation.

Note that Line 6 in Figure 7.10 corresponds to the assignment “tmp[id] = 5566” in Figure 7.9. However, there is no annotation that this store instruction is directed to the address space of tmp[id]. Similarly, Line 8 corresponds to the part of the statement “p[id] = tmp[id]” that reads tmp[id]. There is also no indication that this load accesses the address space of tmp[id].

After processing by a special LLVM pass, the correct address spaces are deduced and appended to the associated memory operations. The store instruction to and load instruction from tmp[id] are annotated with addrspace(3), which is mapped to the group memory segment of HSA. The generated code can now be executed correctly, as illustrated in the following refined LLVM IR (Figure 7.11).

Figure 7.11. LLVM IR produced for the kernel in Figure 7.9, after memory segment annotation.

After all address space qualifiers are specified, the HSAIL compiler can take this LLVM bitcode and emit HSAIL memory instructions with the correct memory segments.

URL:

https://www.sciencedirect.com/science/article/pii/B9780128003862000067

Accurately modeling GPGPU frequency scaling with the CRISP performance model

R. Nath, D. Tullsen, in Advances in GPU Research and Practice, 2017

3.3 Compute/Store Path Portion

The compute/store critical path includes computation (TCSPComp) that is not overlapped with memory operations and simply scales with frequency, plus store stalls (TCSPStall). SMs have a wide SIMD unit (e.g., 32 lanes in Fermi). A SIMD store instruction may result in 32 individual scalar memory store operations if uncoalesced (e.g., A[tid * 64] = 0). As a result, the LSQ may overflow very quickly during a store-dominant phase in the running kernel. Eventually, the level 1 cache runs out of miss status holding register (MSHR) entries, and the instruction scheduler stalls due to the scarcity of free entries in the LSQ or MSHR. We observe this phenomenon in several of our application kernels, as well as in the microbenchmarks we used to fine-tune our models. Again, this is a rare case for CPUs, but it is easily generated on a SIMD core. Although the store stalls are memory delays, they do not remain static as frequency changes. As frequency slows, the rate of store generation (as seen by the memory hierarchy) slows, making it easier for the LSQ and the memory hierarchy to keep up. A simple model (meaning one that requires minimal hardware to track) that works extremely well empirically assumes that the frequency of stores decreases in response to the slowdown of nonoverlapped computation (recall that the overlapped computation may also generate stores, but those stores do not contribute to the stall being considered here). Thus we express the CSP by the following equation in the presence of DVFS.

$$T_{\mathrm{CSP}}(t) = \max\left(T_{\mathrm{CSPComp}} + T_{\mathrm{CSPStall}},\ \frac{f_1}{f_2} \times T_{\mathrm{CSPComp}}\right) \tag{5}$$

We see this phenomenon, but only slightly, in Fig. 11, but much more clearly in Fig. 12, which shows one particular store-bound kernel in cfd. As frequency decreases, computation expands but does not actually increase total execution time; it only decreases the contribution of store stalls.

Fig. 12. Runtime of the cfd kernel at different frequencies.
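Eq. (5) can be tried out numerically with a minimal Python sketch. The function name `csp_time`, the argument names, and the sample numbers are illustrative assumptions, not taken from the chapter. The sketch also assumes that `f1` is the frequency at which the computation and stall times were measured and that `f2` is the frequency being predicted, which this excerpt does not state explicitly.

```python
def csp_time(t_comp, t_stall, f1, f2):
    """Evaluate Eq. (5) for the compute/store path.

    t_comp  -- nonoverlapped computation time measured at frequency f1
    t_stall -- store stall time measured at frequency f1
    f1, f2  -- measured and target frequencies (assumed roles, same unit)
    """
    if f1 <= 0 or f2 <= 0:
        raise ValueError("frequencies must be positive")
    # Computation stretches by f1/f2; store stalls shrink as it stretches,
    # so the total only grows once stretched computation exceeds the old total.
    return max(t_comp + t_stall, (f1 / f2) * t_comp)


# Hypothetical store-bound kernel: 6 time units of computation, 4 of stalls.
for f2 in (1000, 800, 500):
    print(f2, csp_time(6.0, 4.0, 1000, f2))
```

With these made-up inputs the predicted time stays flat for a moderate frequency drop, because the stretched computation is absorbed by the shrinking store stalls, and starts to grow only when the stretched computation alone exceeds the original total.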

We assume that the stretched overlapped computation and the pure computation will serialize at a lower frequency. This is a simplification, and a portion of CRISP’s prediction error stems from this assumption. It was a conscious choice to trade accuracy for simplicity, because tracking those dependencies between instructions would add another level of complexity.

In summary, then, CRISP divides all cycles into four distinct categories: (1) pure computation, which is completely elastic with frequency, (2) load stall, which as part of the LCP is inelastic with frequency, (3) overlapped computation, which is elastic, but potentially hidden by the LCP, and (4) store stall, which tends to disappear as the pure computation stretches.

URL:

https://www.sciencedirect.com/science/article/pii/B9780128037386000185

MEMORY OPERATIONS

The memory unit supports two basic operations: read and write. The read operation reads previously stored data and the write operation stores a new value in memory. Both of these operations require a memory address. In addition, the write operation requires specification of the data to be written.

The fetch-execute cycle

The basic operation of a computer is called the ‘fetch-execute’ cycle. The CPU is designed to understand a set of instructions, known as the instruction set. It fetches the instructions from the main memory and executes them. This is done repeatedly from when the computer is booted up to when it is shut down.

1. The CPU fetches the instructions one at a time from the main memory into the registers. One register is the program counter (pc). The pc holds the memory address of the next instruction to be fetched from main memory.

2. The CPU decodes the instruction.

3. The CPU executes the instruction.

4. Repeat until there are no more instructions.
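The four steps above can be sketched as a toy interpreter in Python. This is only an illustration under stated assumptions: the four opcodes (`LOAD`, `ADD`, `STORE`, `HALT`), the single accumulator register, and the function name `run` are hypothetical and do not describe any real CPU's instruction set.

```python
def run(program, memory):
    """Run a toy fetch-execute loop and return the final data memory.

    program -- list of (opcode, operand) pairs standing in for main memory
    memory  -- list of integers used as data memory
    The opcodes handled below are hypothetical, chosen for illustration.
    """
    memory = list(memory)  # work on a copy so the caller's list is untouched
    pc = 0                 # program counter: address of the next instruction
    acc = 0                # a single accumulator register

    while pc < len(program):           # step 4: repeat while instructions remain
        opcode, operand = program[pc]  # step 1: fetch the instruction at pc
        pc += 1                        # pc now holds the next instruction's address

        # steps 2 and 3: decode the opcode, then execute it
        if opcode == "LOAD":           # memory read: needs only an address
            acc = memory[operand]
        elif opcode == "ADD":
            acc += memory[operand]
        elif opcode == "STORE":        # memory write: needs an address and data
            memory[operand] = acc
        elif opcode == "HALT":
            break
        else:
            raise ValueError(f"unknown opcode: {opcode!r}")
    return memory


# Add the values at addresses 0 and 1 and store the sum at address 2.
program = [("LOAD", 0), ("ADD", 1), ("STORE", 2), ("HALT", None)]
print(run(program, [2, 3, 0]))
```

The `LOAD` and `STORE` branches mirror the read and write operations of the memory unit: a read is given an address, while a write is given both an address and the value to store.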

Example #1

What is memory? Is it a concrete and tangible atom, which, once stored, can be retrieved by a methodical search or can it get lost in the murky recesses of our minds? Are our memories stored in separate areas or as part of one whole? In everyday conversation we regularly refer to memory in ways which suggest it to contain selective processes – ‘I have a terrible memory for names’, or ‘I never forget a face’ but is it really the case that our memory has specialized systems for the recall of certain information, or is it that memory is, in fact, a single system? Any discussion of the way in which we store information that can be accessed at a later date must address the issues raised here.

To look first at an overview of the architecture of our memories, we are probably all familiar with the idea of a short term and a long term memory. These two oft-used terms, along with the sensory memory, combine to make up the modal model. Several theorists such as Waugh & Norman (1965) have proposed a model in which we have these three separate systems, arranged in a hierarchy.

Sensory stores feed into a phonetically coded short term memory via selective attention, and information then moves to a semantically coded long term memory. We will now look at each of these modules in turn and examine the ideas and evidence underlying each.

The sensory memory is proposed to be the interface with the outside world. Information is received from each of our senses (a separate, modality-specific store is suggested for each sense) and held in a buffer which very quickly decays and is updated. There is good evidence for the existence of the brief storage of a verbatim copy of sensory input in several sensory modalities.

The two most studied are in the visual and auditory modalities, dubbed by Neisser ‘iconic’ and ‘echoic’ memory respectively. Sperling (1960) provided some of the most striking evidence to date when testing recall of a briefly shown (50 msec) 3*4 grid of letters. The results were that typically, 4 letters were recalled.

However, if given an auditory cue to focus on one row, 3 of the 4 letters in that row were recalled. Because any of the three rows could be cued, this implies that roughly 9 of the 12 letters were available a fraction of a second after the stimulus had been shown, a great deal more than was available a second or so later. Indeed, further similar experiments have suggested that the iconic store decays within about 0.5 sec.

Treisman (1964) and Darwin, Turvey, and Crowder (1972) have investigated echoic memory using similar experiments and have found a similar decay effect, though in the auditory modality this appears to be of the order of around two seconds. Such experiments as outlined above seem to provide good evidence for the existence of a sensory store which is quite separate from short term and long term memories and suggests that the human memory is modular at least in this respect. The existence of a sensory store also has great intuitive appeal when we consider the possible uses of such a system.

Sensory memory appears to be preattentive and this seems a useful facility that could be held briefly to provide a steady input for later, conscious processing. Using this line of reasoning it could then be argued that sensory memory is in fact a by-product of sensory processing rather than a memory system in its own right. Whilst this part of memory seems intuitively and experimentally to fit within a modular framework the dichotomy between short term and long term memory is a more contentious area.

The arguments forwarded by advocates of the stores’ models suggest that some information from the sensory store will be particularly useful to the organism and will, therefore, be attended to for further processing, and will thus find its way into the short term memory. This system is thought to be of limited capacity and fragile: the information can easily be lost if the subject is distracted.

Experiments have been conducted to investigate the capacity of the short term store, with Miller (1956) concluding that its span (calculated from the rote recall of a series of random digits) is 7 +/- 2 items. If information is to be stored for a longer period of time it is passed to the long term store, which has no known limits. Atkinson & Shiffrin (1971) have suggested that the transfer between the two stores is facilitated by the rehearsal of the information and that the greater the rehearsal, the stronger the storage of the memory.

Several phenomena have been proposed as evidence for separate systems of long term and short term memory. The two main categories can be summarised as follows: pathological evidence from brain-damaged subjects who exhibit memory disorders, and psychological evidence from normal subjects. To consider first the evidence from normal subjects, one observation that seems to lend support to the modularity argument comes from the serial recall test. Subjects repeating a list of words tend to exhibit primacy and recency effects; that is, they remember best the first and last few words.

It is argued that the recency effect is a product of the short term memory and that the primacy effect is a consequence of long term memory. Further support comes from the fact that these two effects can be manipulated independently, for example by making the subjects count backward for 10 sec after hearing a list of digit strings, which eliminates the recency effect (e.g., Glanzer & Cunitz, 1966).

This also shows the theorized fragility of short term memory and its susceptibility to distraction. Evidence for STM/LTM distinctions also comes from neuropsychology in the form of brain-damaged subjects who exhibit differing levels of amnesia. Brain damage lends itself to two explanations for the poor performance of certain tasks. Mass action or equipotentiality suggests that removing or damaging equal amounts of two brains would lead to equal impairment of performance, and this view gains some support from Lashley’s studies of lesions in rats’ brains.

Contrarily, a modularity theory suggesting domain specificity of the brain would anticipate that damaging different parts of the brain would lead to impairments of different tasks. It could be argued that these particular tasks are simply very demanding on resources and are thus performed badly. However, if a double dissociation can be found between two subjects, that is, if one amnesic subject performs well on task A but poorly on task B whilst another subject exhibits the opposite pattern, then it would seem a fair assumption that there are indeed two processes for the two tasks. In practice, just such double dissociations have been found and purported to be evidence of modularity.

Patients suffering from Korsakoff’s syndrome (amnesia through chronic alcohol abuse) exhibit poor long term memory whilst having a seemingly good short term memory. For example, they are well able to hold normal conversations (where it is essential to remember what the other person has just said) and experiments have shown them to have normal intelligence on the WAIS, normal digit spans and also to exhibit normal recency effects but impaired primacy effects (Baddeley & Warrington 1970).

These findings suggest a normally functioning short term memory in the face of poor long term memory. Less common are patients who have normal long term recall but impaired short term recall, although cases have been found. Shallice & Warrington (1970) report the case of KF who had no difficulty with long term learning but whose digit span was grossly impaired and who only had a very small recency effect.

Whilst the evidence here does seem to back up the modularity of STM and LTM, many criticisms have been leveled at these theories, often highlighting the over-simplification of their explanations of unitary and uniform storage in each store. Experimental information has suggested at least two different long term stores, e.g. episodic and semantic memory (Tulving, 1972). Problems also arise when we consider that patients have been found with normal LTM but impaired STM, which seems in conflict with the idea that long term store is achieved via short term store.

These problems have led researchers such as Baddeley & Hitch (1974), in work revised and updated by Baddeley (1986), to propose a modular theory in which the internal structure of the STM is revised and elaborated upon. Baddeley proposed that the STM consists of a central executive (a processor which is not expanded on in much depth) which utilizes a key element of the STM: the ‘phonological loop’. This can be used as a store for visually or phonetically coded information while the STM is working and was hypothesized as a direct result of neuropsychological evidence (Shallice & Warrington, 1974) which refuted Baddeley’s earlier attempts to revise the modal theory.

This revised working memory model accounts for and is supported by psychological and pathological findings. With a system as complex as memory, it is very difficult to isolate individual functions and phenomena. Whilst the human brain does seem to exhibit some physical modularity, definitive proof is very hard to obtain, though progress is being made through the neuropsychological study of brains that function abnormally.

The commonality of memory dysfunction from similar damaged brain regions does strongly suggest that human memory is to some extent organized in a modular fashion. Specific memory impairments, for example in face recognition, suggest that the brain has specialized functions for remembering certain classes of stimuli, and it does not seem intuitively unreasonable that it should also have a physically modular structure.

Bibliography

Baddeley, A.D. Human Memory: Theory and Practice. Lawrence Erlbaum, 1990.

Eysenck, M.W. & Keane, M.T. Cognitive Psychology. Lawrence Erlbaum, 1991.

Mayes, A.R. Human Organic Memory Disorders. Cambridge, 1988.

Parkin, A.J. Memory and Amnesia. Basil Blackwell, 1987.

Example #2

Memory is the process of storing and retrieving information in the brain. Memory is viewed as a three-step process, which includes sensory memory, short-term memory, and long-term memory. Along with memory there is also forgetting. There are two types of forgetting, availability and accessibility.

Sensory memory is a memory that continues the sensation of a stimulus after that stimulus ends. The two major types of sensory memory are iconic and echoic. Iconic memory occurs when a visual stimulus produces a brief memory trace. This is called an icon. Echoic memory is the brief registration of sounds or echoes in memory. A major factor that influences what will be remembered is attention.

Close attention enhances memory by focusing on a limited range of stimuli. The capacity for sensory memory is very small. The duration for icons lasts about a half of a second. Echoes last for around four seconds.

Short-term memory holds information for fairly short intervals. Its duration ranges from 15 to no more than 30 seconds. The capacity of short-term memory is very limited, as studies of memory span have shown. The three basic processes studied with memory are encoding, storage, and retrieval. They all have an impact on short-term memory.

Encoding has to do with acquiring the information in the first place. In short-term memory it is mainly acoustic, although it can also be visual. The next aspect is storage. In order to increase one’s storage ability, one must be familiar with chunking. Chunking allows a person to remember more information. Memory can also be strengthened through rehearsal, that is, by repeating an item over and over again. The third process is retrieval. With retrieval, one can scan memory in order to retrieve items.

Long-term memory is another type of memory where materials are stored for a much longer time. They can be stored as long as a lifetime. Long-term storage is more likely to be achieved when smaller amounts of information are used. The main forms of encoding for long-term memory are semantic, and sometimes visual. There are two basic types of memory, declarative and procedural.

These are two types of memory devoted to facts and skills. Retrieval for long-term memory is dependent on priming by cues. There are also many mnemonic devices that are used to retrieve information from memory.

Two theories of forgetting are availability and accessibility. Availability is information loss. The four theories of this type are trace-decay, disuse, interference, and encoding failure. Trace-decay theory is when there is no trace of memory due to lack of rehearsal. Disuse theory suggests that repeated retrieval of similar information leads to forgetting. Interference theory suggests that what is already in memory competes with newly learned information.

Encoding failure occurs when there is not enough encoding. Accessibility suggests that information is not totally forgotten from long-term memory but may be hard to retrieve. The two major accessibility theories are retrieval failure and motivational forgetting. Retrieval failure theory holds that the material cannot be retrieved due to a lack of cues. Motivational theory holds that selective forgetting happens in order to reduce anxiety.

For the future, I can now use all of these techniques and strategies to help increase my memory. Making sure I pay close attention to all the lectures and spend more time reviewing my notes will help enhance my study abilities for the first exam. I will attempt to read over and over again the assigned readings. I will try to chunk the new information that I receive. With all of these strategies, I will hopefully be able to do well.

Example #3

Introduction

Evidence suggests that brain memories are not whole; rather, pieces of information stored in different areas of the brain are combined to create memories (Matlin, 2012). This explains why the recalled information is not entirely accurate. Encoding, storage, and recall of skills and facts (semantic memory) or experiences (episodic memory) involve different parts of the brain. This implies that there is a close relationship between memory processes and brain functioning.

Working and Long-term Memories

Over the years, there has been an intense debate on whether working and long-term memories are related. While there are many similarities between the long-term memory (LTM) and working memory (WM), distinct differences also exist between the two. One difference is that the functioning of LTM does not require the activation of WM.

A study by Morgan et al. (2008) revealed that many qualities of LTM such as procedural memory and motor skills do not depend on the working memory. However, episodic memories, which rely on past experiences, may at some point involve the activation of the working memory (Morgan et al., 2008).

Long-term memory has two distinguishing properties: (1) it has no capacity limits and (2) it lacks the temporal decay associated with short-term memory (Morgan et al., 2008). In contrast, WM encompasses tasks of short-term memory that demand more attention but are not directly associated with cognitive aptitudes. It is a combination of different memories working together, including some components of the long-term memory, to organize information in the working memory into fewer units in order to reduce the working memory load.

Both WM and LTM are affected by the level of semantic processing or encoding in the brain. LTM is known to be affected by the qualitative depth of initial memory encoding (Matlin, 2012).

For example, it has been established that encoding during semantic processing results in the improved long-term memory of episodic items compared to the recall of visual or phonological items (Morgan et al. 2008). Similarly, since the performance of WM depends on the level of processing at the encoding stage, semantic processing can lead to improved WM.

Memory Formation in the Brain

Stadthagen-Gonzalez and Davis (2010) propose that memory is formed through dendrite-axonal networks, which become more intense with an increase in the number of events stored in the LTM. Stadthagen-Gonzalez and Davis (2010) also postulate that memory storage involves different cortical areas of the brain, where the sensory experiences are processed.

The neural (brain) cells involved in memory formation undergo physical changes through new interconnections as cognitive and perceptual processes in the brain increase. The synapses (a vast system that connects neurons) are involved in the formation of interconnected memories or neural networks.

It is the neural networks that facilitate the formation of new memories. Karpicke and Roediger (2009) postulate that, through a closely related activity (relayed through similar synapses), a new memory is formed causing changes to the neural circuit to accommodate the new item.

Also, new neurons can be joined to the circuit if they are correlated with previously formed neural networks (Matlin, 2012). Long-term potentiation (LTP) is associated with reverberation (depolarization) in the post- and presynaptic neurons during learning. It is induced through prolonged stimulation of synapses during learning. New memories are maintained through repetitive excitation of LTP, which increases the release of neurotransmitters that can persist for several days or even months.

Adaptive Recall and Forgetfulness

Evidence suggests that the amygdala and the hippocampus regions of the brain interact during the formation of verbal and visual memory (Matlin, 2012). However, the amygdala identifies and stores emotionally important information while the hippocampus creates new neural networks for cognitive material.

It is through the amygdala-hippocampus interaction that emotionally important memories are recalled. The same applies to less emotionally significant events, which are less arousing. Thus, personal and emotional experiences are more easily recalled than neutral events. It also explains why reinforcements improve memory while damage to the hippocampus and amygdala results in impaired memory functioning.

From an evolutionary standpoint, the neural relationship between the hippocampus and the amygdala is an adaptive response to life experiences. Karpicke and Roediger (2009) suggest that stressful conditions affect the processing and storage of new memories. Also, the retrieval strategies of the hippocampus may be repressed under stressful conditions.

Consequently, it becomes adaptive to remember relevant and emotional memories for survival purposes. Also, through amygdala-hippocampus interaction, it becomes adaptive to forget or repress some traumatic or unpleasant memories in order to maintain normal cognitive functioning.

Accuracy of the Memories

Studies have shown that human recollections are often not accurate. This raises questions regarding the extent of the accuracy of the memory. Unsworth and Engle (2011) demonstrate that the hippocampus-amygdala interaction is essential in memory encoding and retrieval, with the amygdala regulating information encoding, storage, and recall from the hippocampus.

Thus, for some time, the recall accuracy of emotionally arousing events is high compared to neutral ones. Evidence also suggests that physiological changes in the level of arousal affect the way memories are replayed. For instance, Unsworth and Engle (2011) show that, at the encoding stage, the level of activation of the amygdala influences memory retention while its damage impairs memory arousal. This highlights the fact that emotional arousal enhances memory accuracy, at least in the short term.

Memory Aids for Memory Impaired Individuals

Memory impairment or loss may have a number of causes, including neurological diseases, aging, trauma, stroke, or brain injury. Individuals suffering from poor memory, amnesia, and PTSD can benefit from memory aids that enhance their memory. Prospective memory (PM) aids can help such people to recall essential actions in their daily lives (Matlin, 2012). They are normally external aids that facilitate semantic memory or systems that allow caregivers to monitor the cognitive functioning of patients with memory problems.

Karpicke and Roediger (2009) group memory support systems into three categories: assurance systems, which monitor a person’s cognitive health at home or in a care setting; compensation systems, which involve functionalities that accommodate the user’s memory impairments; and assessment systems, which are technologies that continuously monitor the cognitive status of users under rehabilitative care.

Developers of these systems rely on the knowledge regarding the functioning of the brain and memory encoding processes to make memory aids. Also, understanding the type of memory affected can help in the treatment of the individual through psychoanalysis.

Example #4

Memory is defined as the accuracy and ease with which a person can retain and recall past experiences (Webster’s Dictionary, pg. 611). It is often thought of as a capacity, such as a cup, that could be full or empty. A more common comparison is one to a computer.

Some minds, like computers, can have more “software”, being able to save and recall more experiences, information, and memories than others can. And like a computer, minds can be upgraded. This is not done with a simple installation of a chip, but by following a number of small procedures that will enhance and sharpen memory. As people age, many people believe that the loss of memory is inevitable.

Once people go over a certain age, they begin to lose their memory and will be thought of as old and forgetful. People who forget things often complain about a bad memory, but in most of these cases, these people never took the time to learn whatever they thought they could remember.

Most scientists believe there is no such thing as a good or bad memory, only good and bad learners. How well a person remembers a fact or event depends on the amount of attention that person gives it (Reich, pg. 396).

Beginning at the age of 50, people of similar ages begin to differ more and more from one another in their mental performance. Some memories drop noticeably, but many stay the same or even rise. Most investigators agree that no mental decline occurs before the age of 65 or 70 that affects a person’s ability to function in the real world (Schrof, pg. 89).

In many societies still today, such as in China, elders are considered the wisest and are very well respected. There are two types of memories, long and short term. Anything remembered under 30 seconds is considered short-term memory, and anything after that is considered long-term memory (Kasschau, pp. 57-58).

Endel Tulving has broken it down even further into “episodic” and “semantic” memories. Episodic memory is remembering specific events or names. Semantic memory refers to general knowledge, like speaking a language or doing math problems (Corsini, pg. 355).

Many things can be done to increase and keep a person’s memory sharp. Seeking variety provides a broad range of experiences that provide reservoirs of knowledge to search through in old age. A willingness to try new things and improvise gives the mind more experience.

People who are at peace and find life fulfilling have a memory that is stronger and lasts longer than those who are often angry or depressed do. Strengthening a memory can start during childhood. Eating right as a baby leads to strong, healthy brains, while nutritional deficits can permanently impair mental functions.

Getting lots of stimulation and staying in school are two ways to make your memory last longer. Enriched environments cause brain cells to grow as much as 25 percent more than those in bland environments (Schrof, pg. 91). When a person reaches young adulthood, making many friends can keep a person sharp. People with many friends often score higher on cognitive tests and are able to adapt better to new situations. Finding a mentor and marrying someone who is smarter than you help also, leading you to strive to match your mate’s abilities (Schrof, pg. 91).

As a person enters middle age, putting away money for trips can be beneficial. People with extra money can treat themselves to mind nourishing experiences like travel and cultural events. Achieve major life goals now to avoid burnout.

People who head into retirement fulfilled will feel at peace with their accomplishments (Schrof, pg. 91). When a person enters the late sixties, they should search for things that continue to challenge them and intrigue the mind. In other words, do not get bored. Doing things that make you feel like you are doing something constructive also helps. Those who do not tend to feel that they have no purpose and to burn out. Taking a daily half-hour walk can increase your scores on intelligence tests.

Too much exercise at too intense a pace hinders the memory (Schrof, pg. 91). Neurologists today are finding that later in life the brain produces less of a chemical involved in the memory process, the neurotransmitter acetylcholine. So far, results have shown that drugs can act like this chemical to recharge the memory. Another method of remembering more is called chunking.

Short-term memory is limited in its duration as well as in its capacity. Your short-term memory can store and retrieve about seven unrelated items. Once your immediate memory is filled, attempting to store more will cause confusion. To store more information and avoid confusion, a person can group items into “chunks” and so remember more.

Using the initials of a string of words can reduce three or four unrelated items to one. Items that are often condensed this way include phone numbers and the names of favorite radio stations (Kasschau, pp. 57-58). There are many elderly people who are or have been considered great people with great minds. The late Mother Teresa was widely considered to have a great mind, and she was in her late eighties. Nelson Mandela is also over eighty and is admired by many because of his experiences and mind.

Grandma Moses has to be one of the more popular great minds of old age, painting and remembering many of her experiences past her 100th birthday. It seems that every day more theories come out about how memory can be improved and kept sharp. Most are simple everyday steps that the majority of people never think about; some are more complicated than the average person will understand. Even today researchers are nowhere near completely understanding memory. With all the continuing study of memory going on, it is safe to say that much more advice will come out in the future about how to strengthen memory.

With new knowledge about memory still coming out, no one knows how much humans will be able to expand the strength of the average memory. With so little of the brain being used at this point in time, maybe in the future more of the brain will be available for use. This would no doubt lead to the expansion of memory. One final comparison: memory can be likened to a car. Lots of cars break down, but with the right maintenance and tune-ups, many never do.

Example #5

If we think about what life is made up of, we can say that memories build a life. We save all the important and happy events that occurred in our lives as well as the saddest and worst moments. It is said that the brain is the most powerful part of a human being, and memory is an essential piece of it.

As I mentioned before, memories build a life; each day we put into practice what we have learned and lived. I believe that in life we have nothing secure but our memories, since once we die we take nothing that we have right now with us. When we remember happy moments we have life; it is like reliving them and feeling the joy we felt at that moment.

Memory as the Topic of Psychology Class

I chose memory as the topic of my Psychology class essay because some time ago I started to have issues with my memory. At first, I thought it was because of the problems that I was going through at the time, and also because I was preparing for college. Time passed and I was still having trouble remembering things. I came to think it was a hereditary health problem, because my dad and grandmother never remember anything. I feel frustrated, because without memory it is as if we had never lived; we constantly live through memories, remembering what we have gone through and managing to take a positive attitude even if a memory brings us negative feelings.

In this essay, I will cover specific topics about memory, which is “the retention of information or experience over time as the result of three key processes: encoding, storage, and retrieval”, according to Laura A. King in Experience Psychology. Throughout the essay I will discuss the basic memory process, the different stages of memory, and the different types of memory, along with explanations of when memory fails (forgetting).

In my opinion, these three subjects are essential to understanding how memory works and to finding an explanation of why we forget things, which is my case. I also want to relate these topics to our daily lives. To begin with, I will explain the process of memory so that later on I can discuss the different possibilities of why we forget.

The first step in the process of memory is called encoding, which is the processing of information into memory, according to the SparkNotes article Memory. For instance, we might remember where we ate in the morning even if we did not try to remember it, whereas we may not be able to remember the material in the textbooks we covered during elementary school, high school, or even more recently in college. In the process of encoding, we have to pay attention to the information so that we can recall it later.

In the context of memory, the second step in the memory process is storage, the retention of information over time and how this information is represented in memory (King). To describe this process, the Atkinson-Shiffrin theory is often used. It is made up of three separate systems: sensory memory, with time frames of a fraction of a second to several seconds; short-term memory, with time frames of up to 30 seconds; and long-term memory, with time frames of up to a lifetime (King). The third and last step of this process is memory retrieval, the process of getting information out of storage.

Sensory or Immediate Memory

Next, I will explain the first stage of memory, called sensory memory or immediate memory. As stated in Experience Psychology, sensory memory holds information from the world in its original sensory form for only an instant. In this stage of memory, the “five” senses are used to hold the information accurately. According to Sensory Memory by Luke Mastin, a stimulus that is detected by our senses has two possible fates: it can either be ignored, meaning it goes away in an instant, or it can be perceived and stay in our memory.

As I mentioned before, our senses are used in the sensory memory stage, and each has its specific name. For example, when we perceive information through our vision, it is called iconic memory, also referred to as visual sensory memory. Iconic memory holds an image for only about ¼ of a second. We also have echoic memory, which refers to auditory sensory memory; this function is in charge of holding part of what we hear.

For instance, when the professor is dictating a subject, we try to write fast so that we can hold on to all the information given at the moment and not forget what the professor said. Another stage of memory is called short-term memory (STM). According to the web article Short-Term Memory by Luke Mastin, short-term memory is responsible for storing information temporarily and determining whether it will be dismissed or transferred on to our third stage, called long-term memory.

Short-term memory is sometimes associated with working memory, a newer concept that the British psychologist Alan Baddeley came up with. Working memory, however, emphasizes how the brain manipulates and assembles information so that we can make decisions, solve problems, and understand the information. It is said that working memory is not as passive as short-term memory, but both have a limited capacity to retain information.

In addition, for this stage we have the findings of George Miller, who wrote the paper The Magical Number Seven, Plus or Minus Two. In this paper, Miller talks about two different situations. The first kind of situation is called absolute judgment, in which a person must correctly differentiate between very similar items such as shades of green and high- or low-pitched tones.

Short-Term Memory

The second situation states that a person must recall items presented in a sequence, meaning that a person must retain a certain number of chunks in their short-term memory. King also mentions that to improve short-term memory we consider two ways of doing it, chunking and rehearsal. According to King, chunking involves grouping or packing information that exceeds the 7 ± 2 memory span into higher-order units that can be remembered as single units.

For example, when the professor is dictating a list of words like cold, water, oxygen, air, rain, and snow, we are likely to recall most or even all six words, unlike with a list such as S IXFL AGSG REATA MERI CA.

A list like that is harder to remember because none of the six chunks makes sense, but if we re-chunk the letters we get “Six Flags Great America”, and that way we have a better chance of remembering it. The second way to improve our short-term memory is by rehearsal. There are two types of rehearsal: maintenance rehearsal and elaborative rehearsal.

Maintenance rehearsal is the repeating of things over and over; usually, we use this type of rehearsal. On the other hand, we have the elaborative rehearsal which is organizing, thinking about and linking new material to existing memories.
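The re-chunking example above can be illustrated with a small Python sketch. This is only a toy illustration, not a model of how the brain works: the function names `rechunk` and `fits_span` are made up for this example, and the span limit of 7 + 2 items is taken from the 7 ± 2 memory span mentioned earlier.

```python
def rechunk(fragments, words):
    """Regroup the letters of meaningless fragments into familiar words.

    Raises ValueError if the words do not use exactly the same letters
    in the same order as the fragments.
    """
    if "".join(fragments).upper() != "".join(words).upper():
        raise ValueError("fragments do not spell the given words")
    return list(words)


def fits_span(items, span=7, tolerance=2):
    """Toy check: does the number of items stay within the 7 +/- 2 span?"""
    return len(items) <= span + tolerance


fragments = ["S", "IXFL", "AGSG", "REATA", "MERI", "CA"]
letters = list("".join(fragments))  # each letter treated as a separate item
chunks = rechunk(fragments, ["Six", "Flags", "Great", "America"])

print(len(letters), fits_span(letters))  # items if memorized letter by letter
print(len(chunks), fits_span(chunks))    # items after re-chunking
```

Memorized letter by letter, the list is far longer than the span, while the same letters regrouped into four familiar words fit comfortably.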

Long-Term Memory

Continuing with the stages of memory, now I will talk about the third stage which is the long-term memory. In the article, What Is Long-Term Memory? by Kendra Cherry, long-term memory (LTM) refers to the continuing storage of information.

Unlike the other two stages of memory, LTM memories can last from a couple of days to many years. LTM is divided into two types of memory: declarative (explicit) memory and procedural (implicit) memory. Later on, I will explain in detail what these two types of memory are. Now that I have gone through the three stages of memory, which are sensory memory, short-term memory, and long-term memory, I will discuss the different types of memory.

The different types of memory belong to long-term memory. The first type of memory that I will talk about is explicit memory, also known as declarative memory. This type of memory “is the conscious recollection of information, such as specific facts and events and, at least in humans, information that can be verbally communicated” (Tulving 1989, 2000).

Declarative (Explicit) Memory

We use our explicit memory when, for example, we try to remember our phone number, write a research paper, or recall the time and date of our appointment with our doctor. It is said that this type of memory is one of the most used in our daily lives, as we constantly remember the tasks that we have to do in our day. Another article by Kendra Cherry, Implicit and Explicit Memory, Two Types of Long-Term Memory, informs us about two major subtypes that fall under explicit memory. The first is episodic memory, which consists of memories of specific episodes of our life such as our high school graduation, our first date, our senior prom, and so on.

The second subsystem of explicit memory is semantic memory; this type of memory is in charge of recalling specific factual information like names, ideas, seasons, days of the month, dates, etc. I can easily remember my quinceañera party, which was on May 24, 2008. At this exact moment I can recall the first thing I did when I woke up that day and also what I did before going to sleep, but there are episodes from that day that I am not able to remember.

Procedural (Implicit) Memory

Next, I will discuss the second type of memory, which is implicit memory. As stated by King, implicit memory is the memory in which behavior is affected by prior experience without a conscious recollection of that experience; in other words, things we remember and do without thinking about them. Some examples of our implicit memories are driving a car, typing on a keyboard, brushing our teeth, and singing a familiar song.

Within implicit memory, we have three subtypes. The first one is procedural memory, which according to King is a type of implicit memory process that involves memory for skills. Procedural memory is basically the main base of implicit memory, since all of us unconsciously do many things throughout the day, such as, as I mentioned before, driving a car or simply dressing ourselves to go to school, work, or wherever we have to go.

Another subtype of implicit memory is classical conditioning, which involves learning a new behavior via the process of association: two stimuli are linked together to produce a new learned response. For instance, phobias can arise through classical conditioning, as the Little Albert experiment showed. Personally, I am more than afraid of spiders; in other words, I have arachnophobia, although I can control it and learn to overcome the fear and anxiety I feel every time I see a spider or even think about one.